Reference Domains Part IV: Metadata & Governance

September 13, 2011

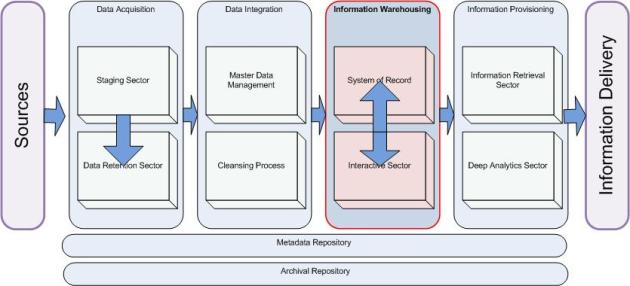

This is the fourth and final part in the series on working with reference domains, also called classifications. The first part provided an overview of their nature, the second recommended an approach to data modelling, and the third explored collecting and documenting them. Here we will discuss metadata related to classifications and how it can be used to assist the governance of content, with particular reference to data quality.

Profiling

Classifications will first be encountered through the analysis process. As the reference domain is identified and the master source of the full list of codes and descriptions is found, it is possible to compare this data against profile results to determine the integrity of the data. Imagine that the field under investigation is the marital status of an individual. The master source reveals that the full list of codes and descriptions includes: 1=Married, 2=Single, 3=Divorced. The table-level profile shows that the minimum value is “1”, while the maximum value is “4”. Because “4” falls outside the documented range of 1 to 3, the source holds at least one value the master list does not explain. With the profile output stored as metadata, and the classifications loaded into reference tables, it is possible to automatically test that the actual values found in the source are within the range expected in the reference tables.
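As a minimal sketch of that automated range test, assume the profile output and the reference values have already been read into memory. The `ColumnProfile` class, the `out_of_range` function and the use of integer codes are illustrative assumptions, not part of any particular profiling tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnProfile:
    """Hypothetical profile metadata stored for one source column."""
    column: str
    min_value: int
    max_value: int


def out_of_range(profile, reference_codes):
    """Return the profiled bounds that fall outside the reference code range."""
    lowest, highest = min(reference_codes), max(reference_codes)
    bounds = (profile.min_value, profile.max_value)
    return [value for value in bounds if not lowest <= value <= highest]


# Reference values from the master source (the marital status example).
marital_status = {1: "Married", 2: "Single", 3: "Divorced"}

# Profile results for the source column (assumed to be stored as metadata).
profile = ColumnProfile(column="marital_status", min_value=1, max_value=4)

violations = out_of_range(profile, marital_status)
print(violations)  # [4]
```

A range test of this kind only inspects the two extremes, so it is cheap to run but will miss unexpected values that sit between valid codes.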

Similarly, a more detailed test could be run at the column-level, with the frequency distribution output compared against the reference values to check that no aberrant values appear.

Aberrant values could be a sign of integrity issues, or may indicate that additional values need to be added to the reference tables.
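The column-level comparison could be sketched along the following lines. The sample values and the `aberrant_values` function are hypothetical; in practice the frequency distribution would come from the stored profile output rather than being counted inline.

```python
from collections import Counter


def aberrant_values(frequencies, reference_codes):
    """Return {value: count} for profiled values missing from the reference list."""
    return {
        value: count
        for value, count in frequencies.items()
        if value not in reference_codes
    }


# Reference values from the master source.
reference_codes = {"1": "Married", "2": "Single", "3": "Divorced"}

# Stand-in for the frequency distribution produced by column profiling.
source_values = ["1", "2", "2", "3", "4", "4", "1", ""]
frequencies = Counter(source_values)

aberrant = aberrant_values(frequencies, reference_codes)
print(aberrant)  # {'4': 2, '': 1}
```

Keeping the counts alongside the values helps with triage: a value that appears thousands of times is more likely a missing reference entry, while a handful of occurrences may point to an integrity problem.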

In order to make full use of this comparison of reference domain values and profiling results, it is important to collect the external classifications as part of the analysis process. This will allow the team to catch anomalies early and avoid rework.

Data Content Governance

For external classifications there may well be decisions to be made around the collection and consolidation of reference domains. A protocol should be developed to address any issues with inconsistent domains of values. Multiple domains will need to be rationalized into a single set of values that will be acceptable to all lines of business. Care needs to be taken to ensure the most authoritative source has been identified, and that a process is in place to handle change notification. This is particularly important in situations where the source is a hard-coded list drawn from documentation.
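One way to support such a protocol is to keep an explicit crosswalk from each line of business's codes to the agreed set, and to report anything not yet mapped. The sketch below is only an illustration: the line-of-business names, their codes, and the `unmapped_codes` function are all invented for the example.

```python
def unmapped_codes(source_domains, crosswalk):
    """List (source, code) pairs that have no agreed target value yet."""
    return [
        (source, code)
        for source, codes in source_domains.items()
        for code in codes
        if (source, code) not in crosswalk
    ]


# Hypothetical domains of values as found in two lines of business.
source_domains = {
    "retail": ["M", "S", "D"],
    "lending": ["1", "2", "3", "4"],
}

# Hypothetical crosswalk to the single rationalized set of values.
crosswalk = {
    ("retail", "M"): "1", ("retail", "S"): "2", ("retail", "D"): "3",
    ("lending", "1"): "1", ("lending", "2"): "2", ("lending", "3"): "3",
}

pending = unmapped_codes(source_domains, crosswalk)
print(pending)  # [('lending', '4')]
```

Rerunning a check like this whenever a source publishes a change gives the governance process a concrete list of decisions still to be made.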

Naming Standards Governance

For internal classifications, the concern is less the governance of content than the enforcement of naming standards. This is especially important in the naming of relationships, to ensure the nature of the relationship is being accurately described. The time to do this is when the logical mapping document passes through the screening process that governs all logical names.

Data Architecture Governance

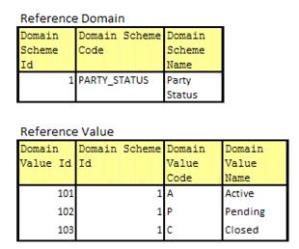

The vast majority of reference domains should pose no challenge to data architecture governance. Most data elements will fit neatly into the simple structures of the Reference Domain and Reference Value tables described in part three of this series. There may be a decision to house long lists of values in separate tables, with a threshold set as the assessment criterion. For example, if a classification contained more than 500 values, it would be held in its own reference table. This would be done to improve access performance, although it may not be required, and should be tested to determine suitability. If a threshold is used to influence design, the profile results can again be used to programmatically assist the design process.
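A threshold rule of this kind is simple to apply once the distinct value counts are available from profiling. In the sketch below, the 500-value threshold comes from the example above, while the column names, the counts and the `needs_own_table` function are assumptions made for illustration.

```python
SEPARATE_TABLE_THRESHOLD = 500  # threshold from the example; tune after testing


def needs_own_table(distinct_count, threshold=SEPARATE_TABLE_THRESHOLD):
    """True when a classification holds more values than the threshold allows."""
    return distinct_count > threshold


# Hypothetical distinct value counts taken from the stored profile results.
profiled_distinct_counts = {"marital_status": 3, "postal_code": 42000}

placement = {
    name: "own table" if needs_own_table(count) else "shared reference tables"
    for name, count in profiled_distinct_counts.items()
}
print(placement)
```

The result is a design recommendation only; whether a separate table actually helps access performance still needs to be tested.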

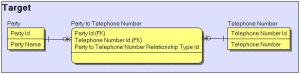

Likewise, there may be a call to create special structures for classifications that have unique attribution or particular structures. For instance, a set of classifications may form a balanced tree hierarchy that could be usefully held in denormalized structures. Again, these exceptions should be rare, and I would suggest they be avoided, with a premium placed on consistency of design.

Model validation should ensure that the length of the source fields is accommodated by the target reference tables. The table profile results can be referenced to make this determination automatically.
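A length check of this sort might look like the following sketch. It assumes the profile records the longest value found in each source field and that the target column lengths are known from the model; the field names, lengths and the `length_overflows` function are hypothetical.

```python
def length_overflows(profiled_max_lengths, target_lengths):
    """Return {field: (profiled, target)} where the source exceeds the target."""
    return {
        field: (profiled, target_lengths[field])
        for field, profiled in profiled_max_lengths.items()
        if profiled > target_lengths[field]
    }


# Longest value observed per source field (assumed profile output).
profiled_max_lengths = {"code": 4, "description": 120}

# Declared lengths of the matching target reference table columns (assumed).
target_lengths = {"code": 10, "description": 100}

overflows = length_overflows(profiled_max_lengths, target_lengths)
print(overflows)  # {'description': (120, 100)}
```

Any field reported here would be truncated on load, so the target column should be widened or the source values investigated before the model is approved.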

This completes the series on reference domains. Please feel free to provide your feedback. What challenges have you faced with classifications? How did you resolve them?